Development and validation of an accurate smartphone application for measuring waist-to-hip circumference ratio


Experimental design

The study hypothesis was tested in two phases. A smartphone application based on computer vision algorithms was developed in the first study phase. The development of this algorithm, MeasureNet, is described in the methods section that follows.

The second phase involved testing MeasureNet performance in a series of experimental studies (Supplementary Fig. 3). First, the accuracy of MeasureNet and of self-measurements was compared with flexible tape measurements taken by trained staff in a sample of healthy adults, referred to as the Circumference Study Dataset (CSD). Accuracy metrics are defined in the Statistical Methods section. Front-, side-, and back-view images of each participant were collected with a smartphone, along with “ground truth” flexible tape circumference measurements taken by trained staff and self-measurements taken by the participants themselves. Circumferences were measured according to NHANES guidelines (Supplementary Note 1). MeasureNet predictions and self-measurements were compared with the ground truth staff tape measurements.

A second experimental study compared MeasureNet with state-of-the-art approaches for three-dimensional (3D) shape estimation. Specifically, we compared MeasureNet, SPIN20, STRAPS21, and recent work by Sengupta et al.17 against ground truth circumferences derived from 3D scans of men and women acquired with a Vitus Smart XXL (Human Solutions North America, Cary, NC)25 laser scanner. This dataset is referred to as the Human Solutions dataset. For each participant we had front-, side-, and back-view color images, height, and body weight, along with the 3D laser scan. The Skinned Multi-Person Linear (SMPL) model was fit to each 3D scan to estimate the shape and pose of the scan19. We extracted the ground truth circumferences from the fitted SMPL model at predefined locations (hip, waist, chest, thigh, calf, and bicep), as shown in Supplementary Figs. 1, 2.

Third, we compared the noise in tape measurements with that of MeasureNet using data from a subset of the healthy men and women in the CSD. Each person was measured twice with a tape by trained staff (staff measurements), and two sets of images were also taken by a staff member (MeasureNet). Each person also measured themselves twice with a measuring tape (self-measurements). For the staff measurements, each person was measured by two different staff members to minimize correlation between consecutive measurements. We used the difference between two consecutive measurements to analyze the noise distributions of the staff measurements, MeasureNet, and the self-measurements.
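The ground truth extraction from the fitted SMPL model can be illustrated with a minimal sketch. It assumes that each predefined location is stored as an ordered ring of mesh vertex indices and that the circumference is the perimeter of the closed polygon through those vertices; `ring_circumference`, the ring definition, and the toy vertices are hypothetical, not the authors' tooling.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def ring_circumference(vertices: Sequence[Point3], ring: Sequence[int]) -> float:
    """Perimeter of the closed polygon that visits ring[0], ring[1], ... and
    returns to ring[0]. `ring` is a hypothetical ordered list of mesh vertex
    indices marking one predefined measurement location (assumption)."""
    n = len(ring)
    return sum(
        math.dist(vertices[ring[i]], vertices[ring[(i + 1) % n]])  # edge length
        for i in range(n)
    )


# Usage: a 1 x 1 square ring lying in the z = 0 plane.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
print(ring_circumference(square, [0, 1, 2, 3]))  # 4.0
```

A flexible tape bridges concave regions of a cross-section, so a convex-hull perimeter is a plausible alternative; which variant underlies the Human Solutions ground truth is not specified in this section.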

Lastly, we compared the accuracy and repeatability of our approach against the ground truth on a synthetic dataset. We created the dataset by rendering each synthetically generated mesh with different camera parameters (height, depth, focal length) and different body poses, in front of randomly selected backgrounds. The dataset was generated using synthetic meshes of 100 men and 100 women and is referred to as the Synthetic Dataset. We used all of the renderings of a particular mesh to measure the repeatability (robustness) of our approach; repeatability metrics are defined in the Statistical Methods section. Because background, camera parameters, and body pose varied across renderings of the same mesh, a repeatable approach should ideally predict the same output for every rendering of that mesh. We also used this dataset to evaluate accuracy over all renderings and their ground truth.

A flow diagram of the human participant evaluations across the studies is presented in Supplementary Fig. 3. Consent was obtained for the collection and use of the personal data voluntarily provided by the participants during the study.

MeasureNet development

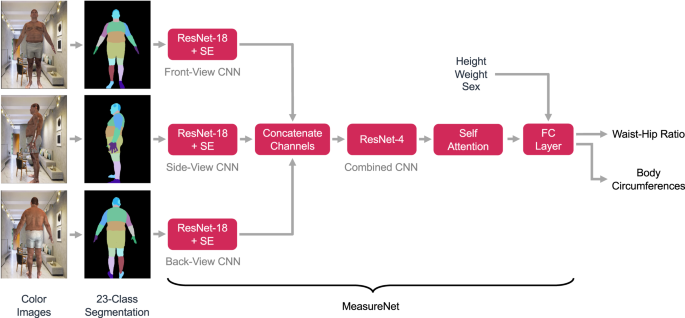

An overview of our approach for measuring WHR is shown in Fig. 3. The user inputs their height, weight, and sex into their smartphone. Voice commands from the application then guide the person to capture front-, side-, and back-viewpoint color images. The images are then automatically segmented into 23 regions, such as the background, upper left leg, lower right arm, and abdomen, by a specialized convolutional neural network (CNN) trained to perform semantic image segmentation. Intuitively, this step suppresses irrelevant background features, provides additional spatial context for body parts, and affords important benefits during model training, which we discuss below. The segmentation result is then passed, along with the user’s height, weight, and sex, as input to the MeasureNet neural network. MeasureNet then estimates the user’s WHR together with other outputs such as body shape, pose, camera position and orientation, and circumferences at the waist, hip, chest, thigh, calf, and bicep.

Overview of the anthropometric body dimension measurement approach. The user first enters their height, weight, and sex into the smartphone application. Voice commands then guide the user into position for capture of front, side, and back color images. The images are segmented into semantic regions using a segmentation network, and the segmentation results are passed to a second network, referred to as MeasureNet, that predicts WHR and body circumferences. Each input is passed through a modified Resnet-18 network; the resulting features are concatenated and passed through a Resnet-4 network, a self-attention block, and a fully connected layer (FC layer) before WHR and body circumferences are predicted. Resnet-18 is modified to include Squeeze-Excitation (SE) blocks. CNN, convolutional neural network. Synthetic images are used to train this model; real images are used during inference after the model is trained. Color images shown in the figure are synthetically generated.

The MeasureNet architecture is built upon a modified Resnet-18 network26,27 that “featurizes” each of the three input segmentation images (i.e., transforms each image to a lower-dimensional representation). Features from each view are then concatenated together and fed to a Resnet-4 network and a self-attention network28 followed by a fully connected layer to predict body circumferences and WHR as illustrated in Fig. 3.
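A heavily simplified NumPy sketch of the fusion stage may help make the data flow concrete. Everything in it is an assumption for illustration: random arrays stand in for the per-view Resnet-18 feature maps, the Resnet-4 stage is omitted, a single standard squeeze-excitation block (with shared weights) is applied once per view rather than inside every residual block, each pooled view becomes one token for single-head self-attention, and one linear layer produces seven outputs (for example WHR plus six circumferences).

```python
import numpy as np

# Assumption: toy stand-ins for MeasureNet's branches; not the authors' model.


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def squeeze_excitation(fmap, w1, w2):
    """Standard SE block on a (C, H, W) feature map: per-channel gating."""
    z = fmap.mean(axis=(1, 2))          # squeeze: global average pool -> (C,)
    s = np.maximum(0.0, w1 @ z)         # bottleneck FC + ReLU -> (C // r,)
    gate = sigmoid(w2 @ s)              # expand FC + sigmoid -> (C,) in (0, 1)
    return fmap * gate[:, None, None]   # rescale each channel


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over (T, D) tokens."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)      # each row sums to 1
    return attn @ v


def fusion_head(view_fmaps, p):
    """Hypothetical fusion of front/side/back features into output values."""
    tokens = np.stack([
        squeeze_excitation(f, p["se_w1"], p["se_w2"]).mean(axis=(1, 2))
        for f in view_fmaps
    ])                                             # (3 views, C)
    fused = self_attention(tokens, p["wq"], p["wk"], p["wv"])
    return p["w_out"] @ fused.reshape(-1)          # FC layer


rng = np.random.default_rng(0)
C, H, W, R, N_OUT = 8, 4, 4, 4, 7   # toy sizes; N_OUT = WHR + 6 circumferences
params = {
    "se_w1": rng.normal(size=(C // R, C)),
    "se_w2": rng.normal(size=(C, C // R)),
    "wq": rng.normal(size=(C, C)),
    "wk": rng.normal(size=(C, C)),
    "wv": rng.normal(size=(C, C)),
    "w_out": rng.normal(size=(N_OUT, 3 * C)),
}
views = [rng.normal(size=(C, H, W)) for _ in range(3)]  # stand-in Resnet features
print(fusion_head(views, params).shape)  # (7,)
```

Treating the three views as attention tokens is one way to let front, side, and back features interact; MeasureNet's actual tensor layout is not described here.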

The following are the key features of the architecture that we found improved accuracy the most:

- Direct prediction of circumferences: Predicting body circumferences directly outperformed first reconstructing the body model (3D SMPL mesh19) and then extracting measurements from it.
- Number of input views: Using three views of the user as input improved accuracy compared with using one or two views. Tables 2 and 3 show the improvement in accuracy with an increasing number of input views and with direct prediction of circumferences.
- Swish vs. ReLU activations: Resnet typically uses ReLU activations26. We found that replacing ReLU with Swish activations29 reduced the percentage of “dead” connections (i.e., connections through which gradients do not flow) from around 50% with ReLU to 0% with Swish, and improved test accuracy.
- Self-Attention and Squeeze-Excitation for non-local interactions: Including squeeze-excitation blocks27 in the Resnet branches for cross-channel attention, and a self-attention block28 after the Resnet-4 block, allowed the model to learn non-local interactions (e.g., between bicep and thigh), further improving accuracy. Supplementary Note 4 shows the accuracy improvements due to the self-attention, squeeze-excitation, and Swish activation blocks.
- Sex-specific model: Training separate, sex-specific MeasureNet models further improved accuracy. As shown in Tables 2 and 3, sex-specific models have lower prediction errors than sex-neutral models.
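The “dead connection” argument for Swish can be checked numerically. The sketch below assumes Swish with β = 1, i.e. x·sigmoid(x), and uses a zero local derivative as a simplified proxy for a dead connection; it counts how many zero-centred Gaussian pre-activations pass no gradient under each activation. ReLU blocks the gradient for every negative input, about half of such a distribution, whereas the Swish derivative is non-zero almost everywhere.

```python
import math
import random


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu_grad(x: float) -> float:
    # d/dx max(0, x): exactly zero for every negative pre-activation.
    return 1.0 if x > 0 else 0.0


def swish_grad(x: float) -> float:
    # Assumption: Swish with beta = 1.
    # d/dx [x * sigmoid(x)] = sigmoid(x) * (1 + x * (1 - sigmoid(x))).
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


def dead_fraction(grad_fn, xs, tol=1e-8):
    """Share of pre-activations whose local gradient is (numerically) zero,
    used here as a simplified proxy for a 'dead' connection (assumption)."""
    return sum(abs(grad_fn(x)) < tol for x in xs) / len(xs)


random.seed(0)
pre_acts = [random.gauss(0.0, 1.0) for _ in range(10_000)]
print(dead_fraction(relu_grad, pre_acts))   # close to 0.5
print(dead_fraction(swish_grad, pre_acts))  # close to 0.0
```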

MeasureNet predicts multiple outputs, such as body shape, pose, camera, volume, and 3D joints. Predicting multiple outputs in this way (multi-tasking) has been shown to improve accuracy for human-centric computer vision models30. Additionally, MeasureNet predicts circumferences and WHR. Some of the outputs (e.g., SMPL shape and pose parameters) are used only to regularize the model during training and are not used during inference31. The inputs and outputs to MeasureNet are shown in Fig. S4. Important MeasureNet outputs related to circumferences and WHR are:

- Dense Measurements: MeasureNet predicts 112 circumferences defined densely over the body (details in Supplementary Note 2). Dense measurements reduce the output domain gap between synthetic and ground truth measurements by selecting the circumference ring (out of 112) that minimizes the error between tape measurements taken by trained staff and synthetic measurements at that ring. The table in Supplementary Note 2 shows that the prediction error is lowest at the optimal circumference ring, which is therefore well aligned with the staff measurements.
- WHR Prediction: Our model can predict WHR both indirectly (by taking the ratio of the waist and hip estimates) and directly (through regression or classification). WHR-related outputs are shown in Fig. S4. The final WHR prediction is an ensemble result, i.e., the average of the individual WHR predictions. As shown in Supplementary Note 5, the ensemble prediction had the lowest repeatability error (most robust) without losing accuracy compared with the individual predictions via regression, classification, or the ratio of waist and hip.
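The two output-side ideas above can be sketched in plain Python. `best_ring` selects, from densely predicted rings, the one whose predictions best match staff tape measurements (by mean absolute error), and `ensemble_whr` averages the ratio, regression, and classification estimates with equal weight. Decoding the classification head as the softmax-expected bin centre is our assumption; the function names, bin centres, and numbers are hypothetical.

```python
import math
from statistics import mean
from typing import Sequence


def best_ring(pred_rings: Sequence[Sequence[float]], staff: Sequence[float]) -> int:
    """pred_rings[p][r]: predicted circumference of person p at ring r (e.g. 112
    rings); staff[p]: staff tape measurement. Returns the ring index whose
    predictions have the lowest mean absolute error against the staff tape."""
    n_rings = len(pred_rings[0])

    def ring_mae(r: int) -> float:
        return mean(abs(pred[r] - s) for pred, s in zip(pred_rings, staff))

    return min(range(n_rings), key=ring_mae)


def whr_from_classification(logits: Sequence[float], bin_centers: Sequence[float]) -> float:
    """Hypothetical decoding: expected WHR under a softmax over WHR bins."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return sum(e / total * c for e, c in zip(exps, bin_centers))


def ensemble_whr(waist, hip, whr_regression, whr_logits, bin_centers) -> float:
    """Equal-weight average of the ratio, regression and classification WHR."""
    return mean([
        waist / hip,                                       # indirect: ratio
        whr_regression,                                    # direct: regression
        whr_from_classification(whr_logits, bin_centers),  # direct: classification
    ])


# Toy usage with made-up numbers (cm for circumferences).
print(best_ring([[80, 90, 100], [70, 85, 95]], staff=[91, 84]))  # 1
print(round(ensemble_whr(80.0, 100.0, 0.82, [0.0, 0.0], [0.78, 0.82]), 4))
```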

We include training losses on shape, pose, camera, 3D joints, mesh volume, circumferences, and waist-hip ratio (through classification and regression). The losses are defined in Supplementary Note 6. Because there are multiple loss functions, hand-tuning each loss weight is expensive and fragile. Following Kendall et al.31, we used uncertainty-based loss weighting (Eq. 1), in which the weight parameter \({w}_{i}\) of each loss is learned. Uncertainty-based loss weighting automatically tunes the relative importance of each loss function \({L}_{i}\) based on the inherent difficulty of each task. Supplementary Note 7 shows the improvement in accuracy when using uncertainty-based loss weighting during training.

$${\mathscr{L}}=\sum_{i}\left(\frac{1}{{w}_{i}}{L}_{i}+\log \left(1+{w}_{i}\right)\right)$$

(1)
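A small worked example shows how Eq. 1 down-weights harder tasks. Summing the per-task terms and holding each task loss \({L}_{i}\) fixed (in real training the losses change as the network learns, and the weights are updated jointly with it), setting the derivative with respect to \({w}_{i}\) to zero gives \({w}_{i}^{2}-{L}_{i}{w}_{i}-{L}_{i}=0\). The sketch compares that closed form with gradient descent on \(\log {w}_{i}\); the log parameterization, learning rate, and toy loss values are assumptions.

```python
import math
from typing import List, Sequence


def total_loss(losses: Sequence[float], weights: Sequence[float]) -> float:
    """Eq. 1 summed over tasks: sum_i L_i / w_i + log(1 + w_i)."""
    return sum(l / w + math.log1p(w) for l, w in zip(losses, weights))


def optimal_weight(loss: float) -> float:
    """Stationary point in w > 0 for a fixed task loss L:
    -L / w**2 + 1 / (1 + w) = 0  =>  w**2 - L*w - L = 0  (positive root)."""
    return (loss + math.sqrt(loss * loss + 4.0 * loss)) / 2.0


def fit_weights(losses: Sequence[float], steps: int = 5000, lr: float = 0.05) -> List[float]:
    """Gradient descent on s_i = log(w_i), which keeps every w_i positive
    (this parameterization is an assumption, not taken from the paper)."""
    s = [0.0] * len(losses)
    for _ in range(steps):
        for i, l in enumerate(losses):
            w = math.exp(s[i])
            grad_w = -l / w**2 + 1.0 / (1.0 + w)
            s[i] -= lr * grad_w * w        # chain rule: dJ/ds = dJ/dw * w
    return [math.exp(v) for v in s]


losses = [0.1, 4.0]           # an "easy" and a "hard" task (toy values)
print(fit_weights(losses))    # approximately equal to the closed form below
print([optimal_weight(l) for l in losses])
```

A task with a larger loss ends up with a larger \({w}_{i}\), so its effective weight \(1/{w}_{i}\) shrinks, while the \(\log(1+{w}_{i})\) term stops \({w}_{i}\) from growing without bound.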

Realistic synthetic training datasets

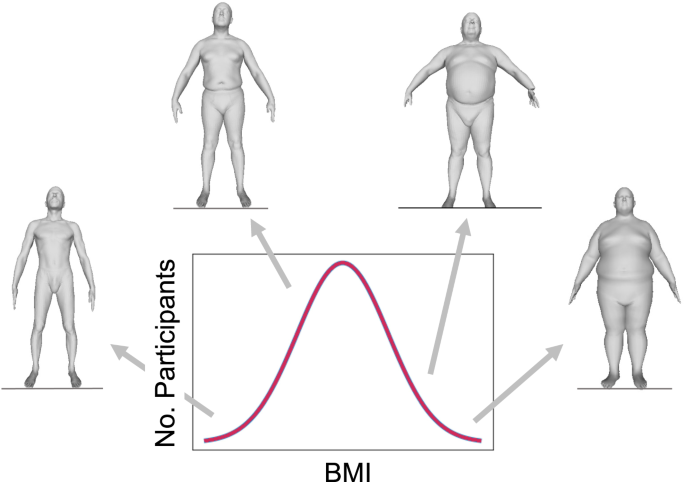

MeasureNet was trained with synthetic data. Using synthetic data avoids expensive manual data collection and annotation, but it comes at the cost of a synthetic-to-real domain gap: a model trained on synthetic data loses accuracy when tested on real data. We reduced the domain gap by simulating a realistic image capture process on realistic 3D bodies with lifelike appearance (texture). Examples of synthesized body shapes for different BMI values are shown in Fig. 4.

The SMPL mesh model19 is parameterized by shape and pose parameters. To encourage realism in the synthetic dataset and minimize the domain gap, it was important to sample only realistic parameters and to match the underlying distribution of body shapes in the target population. Our sampling process, which generated approximately one million 3D body shapes with ground truth measurements, consisted of three steps:

- Fit SMPL parameters: Given an initial bootstrapping dataset of 3500 3D laser scans, we first fitted the SMPL model to all scans19 to establish a consistent topology across bodies and to convert each 3D shape into a low-dimensional parametric representation. Owing to the high fidelity of this dataset and the variation across participants, we used it as a proxy for the North American demographic distribution of body shapes and poses.
- Cluster samples: We recorded the sex and weight of each scanned subject and extracted a small set of measurements from each scan, such as height and hip, waist, chest, thigh, and bicep circumferences. We trained a sex-specific Gaussian Mixture Model (GMM) to group these measurements into 4 clusters (the optimal number of clusters was selected with the Bayesian information criterion).
- Sample the clusters using importance sampling: Finally, we used importance sampling to adjust the likelihood of sampling each scan so that the samples matched the distribution across all clusters. This allowed us to create a large synthetic dataset of shape and pose parameters whose underlying distribution matched the diversity of the North American population. As an additional check, we found that the dataset created in this way closely matched the distribution of the NHANES dataset (https://www.cdc.gov/nchs/nhanes/about_nhanes.htm). NHANES was collected by the Centers for Disease Control and Prevention between 1999 and 2020 and contains the demographics, body composition, and medical conditions of about 100,000 unique participants from the North American population.
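The importance-sampling step can be sketched as follows, assuming each scan already carries its GMM cluster label (for example from scikit-learn's `GaussianMixture`, whose `bic` method can score candidate cluster counts) and that a target probability per cluster is given. The target distribution, function names, and toy cluster sizes are hypothetical; this section does not state them. Each scan receives weight target[k] / n_k, so every cluster is drawn with its target probability regardless of how many scans it holds.

```python
import random
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def importance_weights(cluster_of_scan: Sequence[Hashable],
                       target: Dict[Hashable, float]) -> List[float]:
    """Weight for each scan so that cluster k is drawn with probability
    target[k] (an assumed per-cluster distribution) no matter how many scans
    it contains: w_j = target[k] / n_k."""
    counts = Counter(cluster_of_scan)
    return [target[k] / counts[k] for k in cluster_of_scan]


def sample_scans(cluster_of_scan, target, n, seed=0) -> List[int]:
    """Draw n scan indices with replacement using the importance weights."""
    rng = random.Random(seed)
    weights = importance_weights(cluster_of_scan, target)
    return rng.choices(range(len(cluster_of_scan)), weights=weights, k=n)


# Toy example: cluster 1 is under-represented (10 of 100 scans) but should
# make up half of the samples.
clusters = [0] * 90 + [1] * 10
picked = sample_scans(clusters, target={0: 0.5, 1: 0.5}, n=20_000)
print(sum(clusters[j] for j in picked) / len(picked))  # close to 0.5
```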

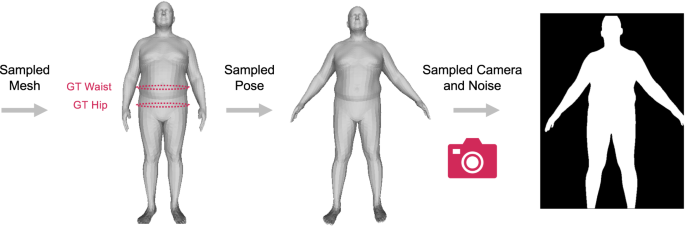

We simulated a realistic capture process by sampling camera orientations across the range of −15 to +15 degrees around each axis, keeping only those that yielded valid renderings of the user in the input image. Valid renderings are images in which the body is visible from at least the top of the head to the knees. This ensures that the sampled camera parameters match the realistic distribution of camera parameters observed for real users. An example of realistic sampling of shape, pose, and camera is shown in Fig. 5.
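Camera sampling with a validity check amounts to rejection sampling. This sketch assumes a pinhole camera, a 1080 × 1920 portrait image, a 1500-pixel focal length, camera heights of 0.8 to 1.6 m, distances of 1.5 to 3.5 m, and only three keypoints (head top and knees) standing in for the full body; orientations are drawn uniformly within ±15° about each axis and redrawn until every keypoint projects inside the image. All of these values and names are hypothetical.

```python
import math
import random

# Hypothetical scene: subject at the origin (y up, metres); camera on the -z side.
KEYPOINTS = [(0.0, 1.75, 0.0),    # top of head
             (-0.12, 0.50, 0.0),  # left knee
             (0.12, 0.50, 0.0)]   # right knee
IMG_W, IMG_H, FOCAL_PX = 1080, 1920, 1500.0  # assumed portrait phone camera


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation(pitch, yaw, roll):
    """Camera-to-world rotation Rz(roll) @ Ry(yaw) @ Rx(pitch); radians."""
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cr, sr = math.cos(roll), math.sin(roll)
    rx = [[1, 0, 0], [0, cp, -sp], [0, sp, cp]]
    ry = [[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]]
    rz = [[cr, -sr, 0], [sr, cr, 0], [0, 0, 1]]
    return matmul(rz, matmul(ry, rx))


def project(point, cam_pos, rot):
    """Pinhole projection to pixels, or None if the point is behind the camera."""
    d = [point[i] - cam_pos[i] for i in range(3)]
    # World -> camera coordinates is R^T @ d because R is orthonormal.
    x, y, z = [sum(rot[k][i] * d[k] for k in range(3)) for i in range(3)]
    if z <= 0:
        return None
    return FOCAL_PX * x / z + IMG_W / 2, -FOCAL_PX * y / z + IMG_H / 2


def is_valid(cam_pos, rot):
    """Valid rendering: head top and both knees fall inside the image."""
    for p in KEYPOINTS:
        uv = project(p, cam_pos, rot)
        if uv is None or not (0 <= uv[0] < IMG_W and 0 <= uv[1] < IMG_H):
            return False
    return True


def sample_camera(rng, max_deg=15.0):
    """Rejection sampling: uniform angles in [-max_deg, max_deg] per axis."""
    while True:
        angles = [math.radians(rng.uniform(-max_deg, max_deg)) for _ in range(3)]
        cam_pos = (0.0, rng.uniform(0.8, 1.6), -rng.uniform(1.5, 3.5))
        rot = rotation(*angles)
        if is_valid(cam_pos, rot):
            return cam_pos, angles


print(sample_camera(random.Random(0)))
```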

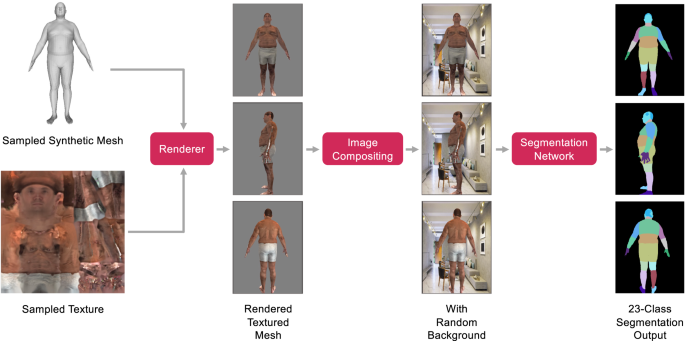

Once body shape, body pose, and camera orientation were sampled, we transferred the texture from a real person onto the 3D mesh, placed it in front of a randomly selected background image (of an indoor scene), and produced a realistic color rendering from the sampled camera pose. This textured color rendering was then segmented with the segmentation network, and the segmentation output was used as input to train MeasureNet. The ground truth targets used to train MeasureNet were extracted from the sampled synthetic mesh. Transferring the texture from a real person allowed us to generate diverse and realistic samples and had two main advantages. First, it avoided manually generating realistic and diverse textures; using this method, we built a texture library of forty thousand samples from trial users (different from test-time users). Second, because we segmented the color images with a trained segmentation model, we did not need additional segmentation noise augmentation30 during training. This contrasts with existing methods21,30 that add segmentation noise to the synthetic images to simulate the noisy segmentation output at test time. We used the segmented image, rather than a textured color image, as input to MeasureNet so that the model could not rely on lighting- or background-related information from the synthetic training data, whose distribution can differ between training and testing. In Supplementary Note 8, we show that a model trained on textured color images generalizes poorly to real examples compared with one trained on segmented images. Intuitively, we believe this is because synthetic textured color images lack realism on their own but produce realistic segmentation results when passed through a semantic segmentation model.

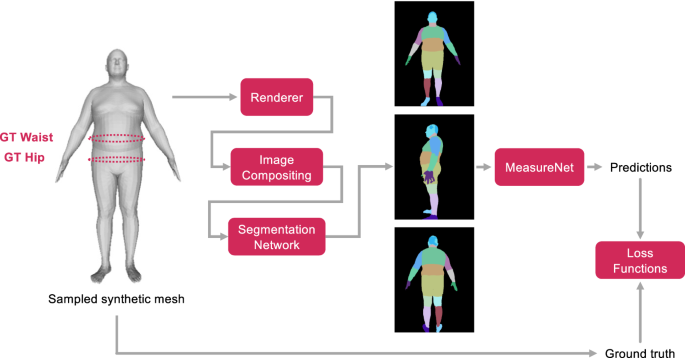

Overall, the texture transfer process consisted of two steps. First, we created a texture library of around forty thousand texture images extracted from real images of trial users using our participant pipeline. Second, we rendered a randomly sampled synthetic mesh with a random texture image on a random background and passed the rendering through the segmentation network. The process of realistic textured rendering by transferring the texture from a real person (synthetic in this case) is shown in Fig. 6. The segmented renderings (produced by the fixed segmentation network) were used as input to train MeasureNet, with ground truth targets extracted from the sampled synthetic mesh. The end-to-end training process for MeasureNet is shown in Fig. 7.
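The data-generation loop can be summarized in a short sketch. `render`, `segment`, and `targets_from_mesh` are hypothetical callables standing in for the renderer, the fixed segmentation network, and ground truth extraction; camera and pose sampling are folded into `render` for brevity. The point the sketch makes is structural: the model input is always the segmentation of a textured rendering, while the targets come from the mesh itself.

```python
import random
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence


@dataclass
class TrainingExample:
    segmentation: Any           # network input (never the RGB rendering)
    targets: Dict[str, float]   # ground truth read from the synthetic mesh


def make_training_set(meshes: Sequence[Any], textures: Sequence[Any],
                      backgrounds: Sequence[Any],
                      render: Callable[[Any, Any, Any], Any],
                      segment: Callable[[Any], Any],
                      targets_from_mesh: Callable[[Any], Dict[str, float]],
                      n: int, seed: int = 0) -> List[TrainingExample]:
    """Texture-transfer pipeline: random mesh + random real texture + random
    background -> rendering -> fixed segmentation network -> training pair.
    render/segment/targets_from_mesh are hypothetical stand-ins."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        mesh = rng.choice(meshes)
        image = render(mesh, rng.choice(textures), rng.choice(backgrounds))
        examples.append(TrainingExample(segment(image), targets_from_mesh(mesh)))
    return examples


# Toy stand-ins so the sketch runs; a real renderer and segmenter replace them.
meshes = [{"waist": 80.0, "hip": 100.0}, {"waist": 90.0, "hip": 104.0}]
data = make_training_set(
    meshes, textures=["tex_a", "tex_b"], backgrounds=["kitchen", "office"],
    render=lambda m, t, b: ("rgb", m["waist"], t, b),
    segment=lambda img: ("seg",) + img[1:],
    targets_from_mesh=dict, n=4)
print(data[0].targets)
```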

Generation of realistic color mesh renderings by transferring texture from a real person (synthetic in this example). The renderings, once segmented by a fixed network, are used as input to train MeasureNet. The ground truth targets used to train MeasureNet are extracted from the sampled synthetic mesh.

Training of the MeasureNet model using realistic synthetic data. Given a sampled synthetic mesh, realistic synthetic images are generated and then segmented. The segmented images are used as input to MeasureNet, and the corresponding predictions are compared against the ground truth extracted from the synthetic mesh.

Statistical methods

The accuracy of MeasureNet and of self-measurements was compared with the trained-staff ground truth measurements in the CSD using the mean absolute error (MAE; Eq. 2) and mean absolute percentage error (MAPE; Eq. 3) metrics. MAE calculates the average absolute error of MeasureNet’s predictions or of self-measurements with respect to the ground truth tape measurements. MAPE is similar to MAE but expresses each absolute error as a relative (percentage) error before averaging.

$${MAE}=\frac{{\sum }_{i=1}^{n}\left|{G}_{i}-{P}_{i}\right|}{n}$$

(2)

$${MAPE}=\frac{100 \% }{n}\mathop{\sum }\limits_{i=1}^{n}\left|\frac{{G}_{i}-{P}_{i}}{{P}_{i}}\right|$$

(3)

\({G}_{i}\) is the ground truth, \({P}_{i}\) is the prediction, and \(n\) is the number of users. MAE was also used to compare MeasureNet with other state-of-the-art approaches for estimating circumferences and WHR.
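Eqs. 2 and 3 translate directly into code. The sketch follows Eq. 3 as written, with the prediction \({P}_{i}\) in the denominator; many references define MAPE relative to the ground truth \({G}_{i}\) instead, so swapping the denominator would give that variant. Function names and values are illustrative.

```python
from typing import Sequence


def mae(g: Sequence[float], p: Sequence[float]) -> float:
    """Eq. 2: mean of |G_i - P_i|."""
    if len(g) != len(p) or not g:
        raise ValueError("need equally long, non-empty sequences")
    return sum(abs(gi - pi) for gi, pi in zip(g, p)) / len(g)


def mape(g: Sequence[float], p: Sequence[float]) -> float:
    """Eq. 3 as written: 100% / n * sum |(G_i - P_i) / P_i|.
    (The more common MAPE convention divides by the ground truth G_i.)"""
    if len(g) != len(p) or not g:
        raise ValueError("need equally long, non-empty sequences")
    return 100.0 / len(g) * sum(abs((gi - pi) / pi) for gi, pi in zip(g, p))


staff = [80.0, 100.0]      # ground truth tape measurements (cm), toy values
measurenet = [82.0, 95.0]  # predictions (cm), toy values
print(mae(staff, measurenet))   # 3.5
print(mape(staff, measurenet))
```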

The same procedure was used to evaluate noise in staff measurements, MeasureNet predictions, and self-measurements, allowing the measurement noise of flexible tape-based measurements (staff and self) to be compared with that of MeasureNet’s predictions. Noise was estimated by plotting histograms of the differences between two consecutive measurements or predictions (meas1 and meas2). Biases in the differences were removed before plotting by including the differences (Δs) in both directions: meas1 − meas2 and meas2 − meas1. We then fit Gaussian curves to the resulting histograms to estimate the noise standard deviations.
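The symmetric-difference step and the Gaussian noise estimate can be sketched as below. Instead of curve-fitting a Gaussian to histogram bins, as described above, this version fits a zero-mean Gaussian to the pooled differences by maximum likelihood, a simpler substitute; the simulated data and function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def symmetric_differences(meas1: Sequence[float], meas2: Sequence[float]) -> List[float]:
    """meas1 - meas2 and meas2 - meas1 pooled together, so the distribution is
    symmetric about zero (its mean is zero up to rounding)."""
    d = [a - b for a, b in zip(meas1, meas2)]
    return d + [-x for x in d]


def zero_mean_gaussian_sigma(diffs: Sequence[float]) -> float:
    """Maximum-likelihood sigma of a zero-mean Gaussian fitted to the raw
    differences (substitute for curve-fitting the histogram; assumption)."""
    return math.sqrt(sum(x * x for x in diffs) / len(diffs))


# Simulated repeat tape measurements with independent 1 cm noise (toy data).
rng = random.Random(0)
truth = [rng.uniform(70.0, 110.0) for _ in range(20_000)]
m1 = [t + rng.gauss(0.0, 1.0) for t in truth]
m2 = [t + rng.gauss(0.0, 1.0) for t in truth]
sigma = zero_mean_gaussian_sigma(symmetric_differences(m1, m2))
print(round(sigma, 2))  # about 1.41
```

If both measurements carry independent noise of equal size, their difference has √2 times the per-measurement standard deviation, which is why simulated 1 cm noise yields a spread of about 1.41 cm.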

Repeatability was computed on the Synthetic Dataset as the mean and 90th percentile (P90) of absolute differences, using the following steps: (1) for each session, i.e., the set of renderings of the same synthetic mesh with different camera parameters and body poses in front of random backgrounds, we computed the mean estimate (µ); (2) for each scan (rendering) in the session, we computed the absolute difference from the session mean, |pred − µ|; and (3) we computed the mean and P90 of these absolute differences across all scans.
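The three repeatability steps map onto a short function. The percentile convention is not stated in the text, so linear interpolation (NumPy's default) is assumed here; the session values are toy numbers.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def percentile(values: Sequence[float], q: float) -> float:
    """Linear-interpolation percentile (assumed; matches NumPy's default)."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def repeatability(sessions: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """sessions[s]: predictions for every rendering of one synthetic mesh.
    Returns (mean, P90) of |pred - session mean| pooled over all renderings."""
    abs_dev: List[float] = []
    for preds in sessions:
        mu = mean(preds)                              # step 1: session mean
        abs_dev.extend(abs(p - mu) for p in preds)    # step 2: |pred - mu|
    return mean(abs_dev), percentile(abs_dev, 90)     # step 3: mean and P90


# Two toy sessions (e.g. waist predictions in cm for two meshes).
print(repeatability([[1.0, 2.0, 3.0], [5.0, 5.0]]))  # (0.4, 1.0)
```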

Ethics review

Collection of the participant data evaluated in this study was approved by the PBRC Institutional Review Boards (clinicaltrials.gov identifier: NCT04854421). The reported investigation extends the analyses to anthropometric data (waist and hip circumference measurements) and reflects a secondary analysis of data collected by Amazon vendors in commercial settings. All participants in these no-risk studies signed consent forms granting full permission to use their anonymized data. The investigators will share the data in this study with outside investigators upon request to, and approval by, the lead author.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.
